If your organization is adding generative AI to its processes, you’ve probably hit the same wall as everyone else: “How do we govern this responsibly?”

It’s one thing to get a large language model (LLM) to generate a summary, write an email, or classify a support ticket. It’s another entirely to make sure that use of AI fits your company’s legal, ethical, operational, and technical policies. That’s where governance comes in—and frankly, it’s where most organizations are struggling to find their footing.

The challenge isn’t just technical. Sure, you need to worry about prompt injection attacks, hallucinations, and model drift. But you also need to think about compliance audits, cost control, human oversight, and the dreaded question from your CEO: “Can you explain why the AI made that decision?” These aren’t abstract concerns anymore—they’re real business risks that can derail AI initiatives faster than you can say “responsible deployment.”

That’s where Camunda comes into the picture. We’re not an AI governance platform in the abstract sense. We don’t decide your policies for you, and we’re not going to tell you whether your use case is ethical or compliant. But what we do provide is something absolutely essential: a controlled environment to integrate, orchestrate, and monitor AI usage inside your processes, complete with the guardrails and visibility that support enterprise-grade governance.

Think of it this way: if AI governance is about making sure your organization uses AI responsibly, then Camunda is the operational backbone that makes those policies actually enforceable in production systems. We’re the difference between having a beautiful AI ethics document sitting in a SharePoint folder somewhere and actually implementing those principles in your day-to-day business operations.

This post will explore how Camunda fits into the broader picture of AI governance, diving into specific features—from agent orchestration to prompt tracking—that help you operationalize your policies and build trustworthy, compliant automations.

What is AI governance, and where does Camunda fit?

Before we dive into the technical details, it’s worth stepping back and talking about what AI governance actually means. The term gets thrown around a lot, but in practice, it covers everything from high-level ethical principles to nitty-gritty technical controls.

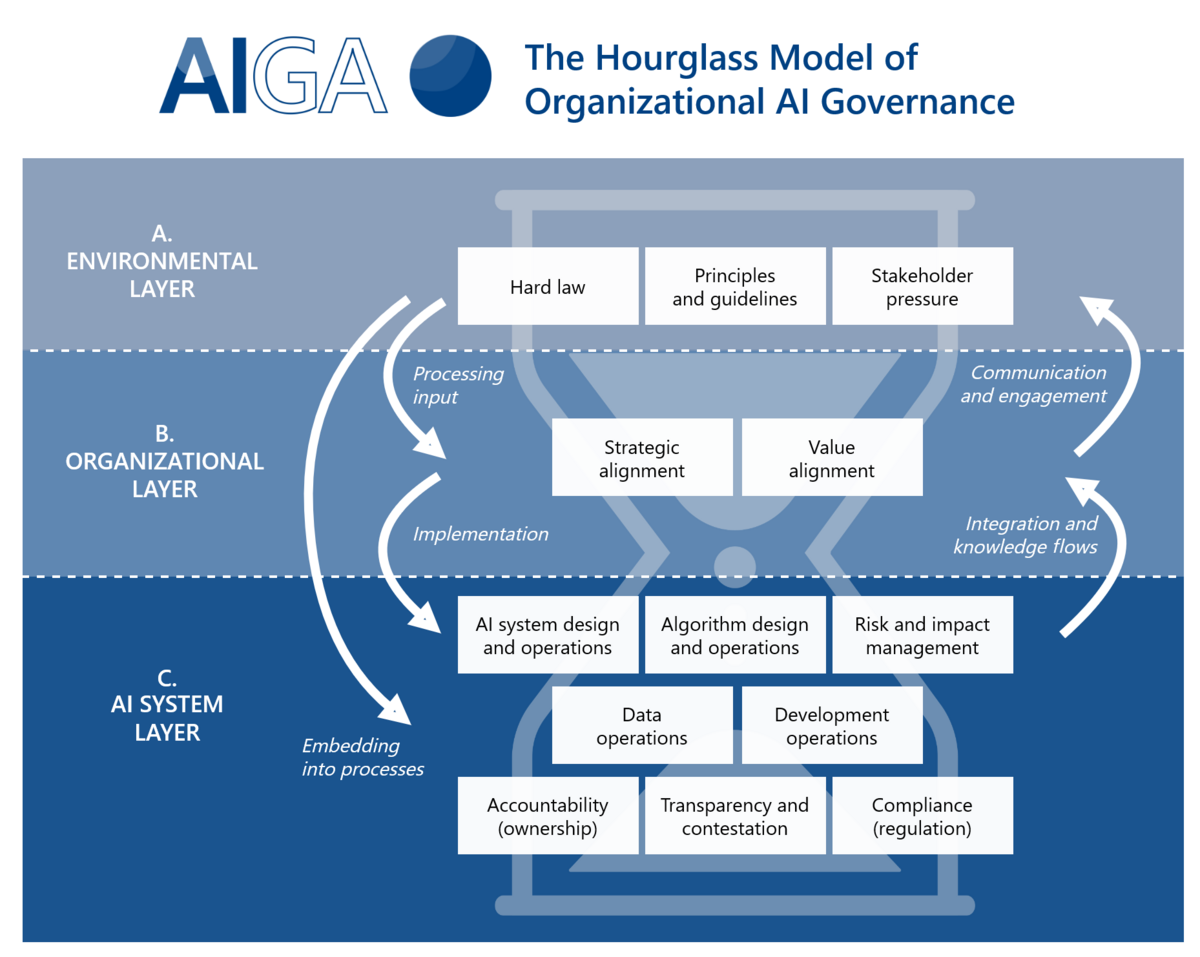

We’re framing this discussion around the “AI Governance Framework” provided by ai-governance.eu, which defines a comprehensive model for responsible AI oversight in enterprise and public-sector settings. The framework covers organizational structures, procedural requirements, legal compliance, and technical implementations.

Camunda plays a vital role in many areas of governance, but most of all in the “Technical Controls (TeC)” category. This is where the rubber meets the road: your governance policies get translated into actual system behaviors. Technical controls include enforcing process-level constraints on AI use, ensuring explainability and traceability of AI decisions, supporting human oversight and fallback mechanisms, and monitoring inputs, outputs, and usage metrics across your entire AI ecosystem.

Here’s the crucial point: these technical controls don’t replace governance policies—they ensure that those policies are actually followed in production systems, rather than just existing as aspirational documents that nobody reads.

1. Fine-grained control over how AI is used

The first step to responsible AI isn’t choosing the right model or writing the perfect prompt—it’s being deliberate about when, where, and how AI is used in the first place. This sounds obvious, but many organizations end up with AI sprawl, where different teams spin up AI integrations without any coordinated approach to governance.

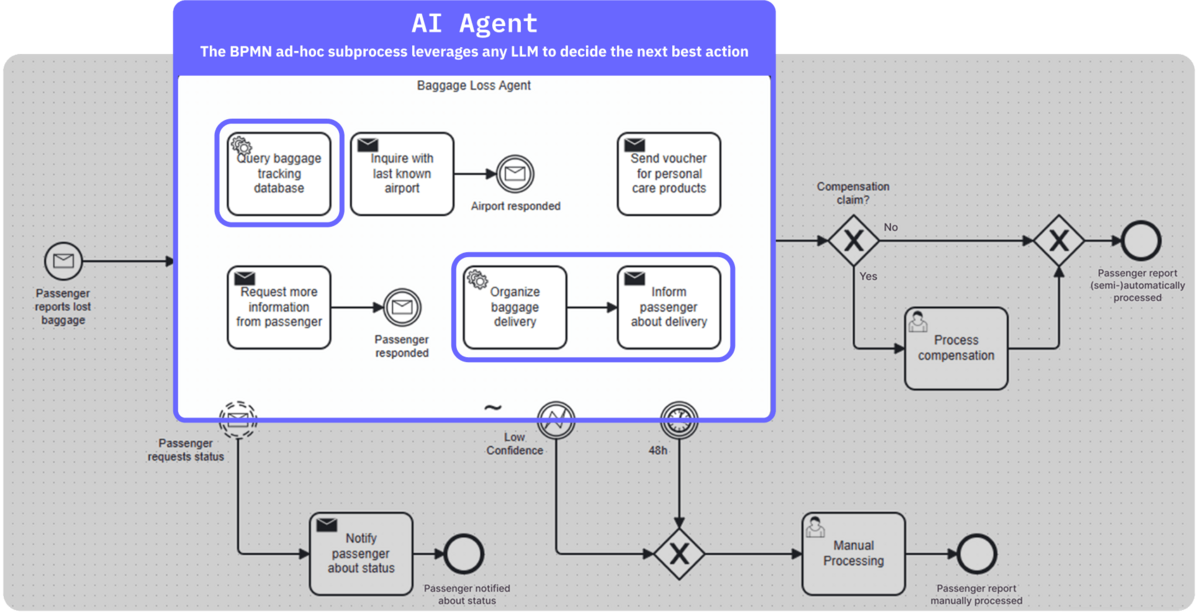

With Camunda, AI usage is modeled explicitly in BPMN (Business Process Model and Notation), which means every AI interaction is part of a documented, versioned, and auditable process flow.

You can design processes that use Service Tasks to call out to LLMs or other AI services, but only under specific conditions and with explicit input validation. User Tasks can involve human reviewers before or after an AI step, ensuring critical decisions always have human oversight. Decision Tables (DMN) can evaluate whether AI is actually needed based on specific inputs or context. Error events and boundary events capture and handle failed or ambiguous AI responses, building governance directly into your process logic.

Because the tasks that Camunda’s AI agents execute are defined in BPMN, each task can itself be a deterministic workflow, so execution remains predictable at a granular level.

This level of orchestration lets you inject AI into your business processes on your own terms, rather than letting the AI system dictate behavior. You’re not just calling an API and hoping for the best—you’re designing a controlled environment where AI operates within explicit boundaries.

Here’s a concrete example: if you’re processing insurance claims and want to use AI to classify them as high, medium, or low priority, you can insert a user task to verify all “high priority” classifications before they get routed to your fraud investigation team. You can also add decision logic that automatically escalates claims above a certain dollar amount, regardless of what the AI thinks. This way, you keep humans in the loop for critical decisions without slowing down routine processing.
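To make the ordering of those rules concrete, here is a minimal Python sketch of the routing logic. In a real model this would live in a DMN decision table and a user task rather than in code; the threshold value, the field names, and the step names below are all assumptions made for illustration.

```python
from dataclasses import dataclass

# Hypothetical threshold; the right amount depends on your own policy.
ESCALATION_THRESHOLD = 50_000


@dataclass
class Claim:
    claim_id: str
    amount: float
    ai_priority: str  # "high", "medium", or "low", as returned by the classifier


def route_claim(claim: Claim) -> str:
    """Return the name of the next step for a claim (step names are illustrative)."""
    # Rule 1: large claims escalate regardless of what the AI classified.
    if claim.amount > ESCALATION_THRESHOLD:
        return "escalate"
    # Rule 2: a human verifies every "high" classification before it
    # reaches the fraud investigation team.
    if claim.ai_priority == "high":
        return "human_review"
    # Everything else continues through routine processing.
    return "routine_processing"
```

Note that the dollar-amount rule is checked first, so the AI classification can never override it.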

2. Your models, your infrastructure, your rules

One of the most frequent concerns about enterprise AI adoption centers on data privacy and vendor risk. Many organizations have strict requirements that no customer data, internal business logic, or proprietary context can be sent to third-party APIs or cloud-hosted LLMs.

Camunda’s approach to agentic orchestration supports complete model flexibility without sacrificing governance capabilities. You can use OpenAI, Anthropic, Mistral, Hugging Face, or any provider you choose, and, starting with Camunda 8.8 (coming in October 2025), you can also route calls to self-hosted LLMs running on your own infrastructure. Whether you’re running LLaMA 3 on-premises, using Ollama for local development, or connecting to a private cloud deployment, Camunda treats all of these as different endpoints in your process orchestration.

There’s no “magic” behind our AI integration—we provide open, composable connectors and SDKs that integrate with standard AI frameworks like LangChain. You control the routing logic, prompt templates, authentication mechanisms, and access credentials. Most importantly, your data stays exactly where you want it.

For example, a financial services provider might route customer account inquiries to a cloud-hosted model, but keep transaction details and personal financial information on-premises. With Camunda, you can model this routing logic explicitly using decision tables to determine which endpoint to use based on content and context.
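As a rough sketch of what such a routing rule might look like, the Python below picks an endpoint based on the content of an inquiry. The endpoint labels and the patterns are hypothetical, and simple keyword matching like this is only a stand-in for whatever classification your policy actually requires.

```python
import re

# Hypothetical endpoint labels, not real Camunda configuration values.
ON_PREM = "on_prem_llm"
CLOUD = "cloud_llm"

# Illustrative patterns for content that should stay on-premises.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{4}[ -]?\d{4}[ -]?\d{4}[ -]?\d{4}\b"),  # card-like numbers
    re.compile(r"\b(transaction|balance|iban)\b", re.IGNORECASE),
]


def choose_endpoint(text: str) -> str:
    """Route sensitive content to the on-premises model, everything else to the cloud."""
    if any(pattern.search(text) for pattern in SENSITIVE_PATTERNS):
        return ON_PREM
    return CLOUD
```

Expressed as a decision table, each pattern would become a rule row and the endpoint would be the output column.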

3. Design AI tasks with guardrails: Preventing prompt injection and hallucinations

Prompt injection isn’t just a theoretical attack—it’s a real risk that can have serious business consequences. Any time an AI model processes user-generated input, there’s potential for malicious content to manipulate the model’s behavior in unintended ways.

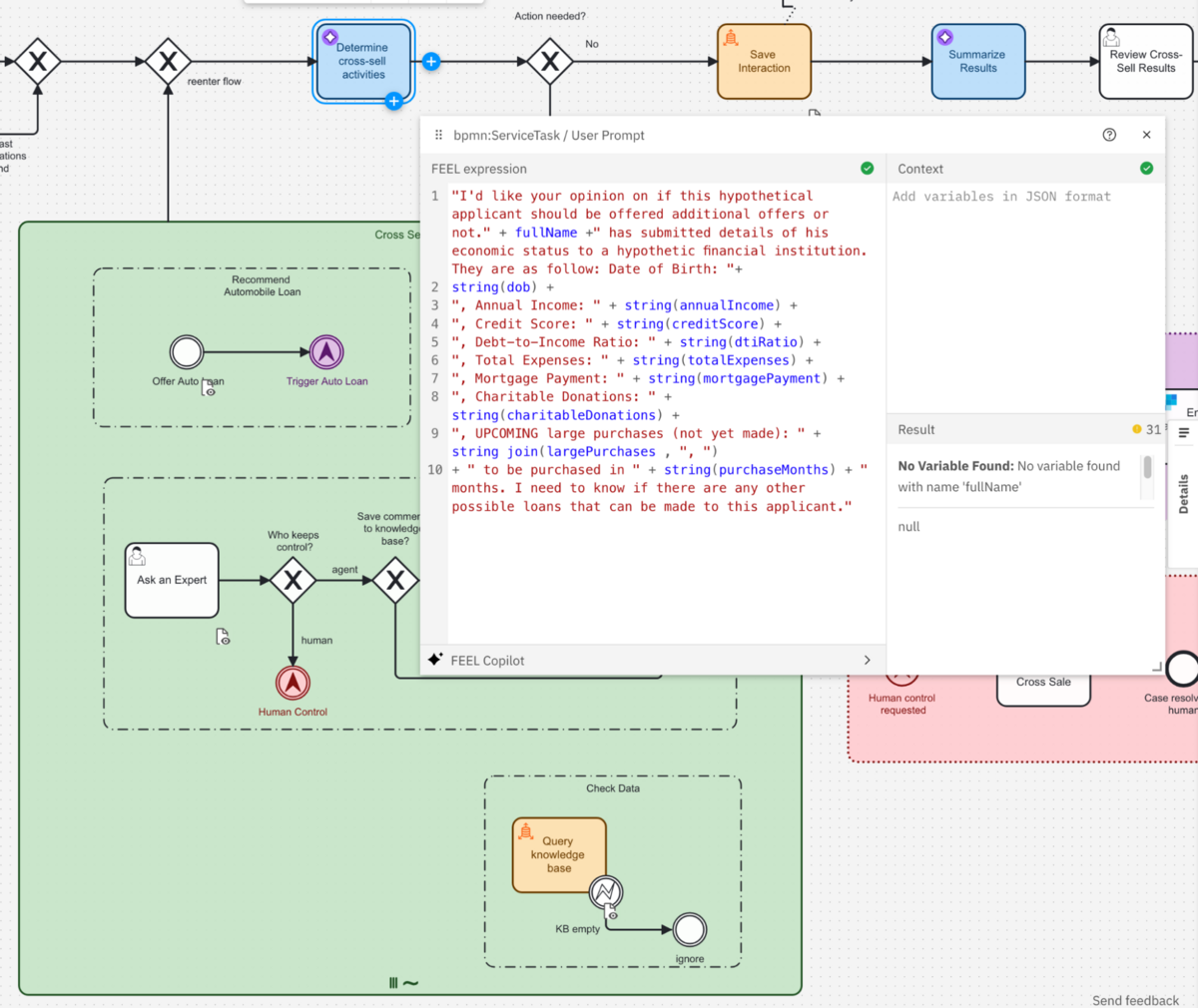

Camunda helps mitigate these risks by providing structured approaches to AI integration. All data can be validated and sanitized before it is used in a prompt, which keeps raw input from reaching the model. Prompts are written in FEEL (Friendly Enough Expression Language), which keeps them flexible and dynamic. Because prompts are designed centrally, they become part of your process documentation rather than being buried in application code. Execution listeners can also analyze and sanitize a prompt before it is sent to the agent.

Decision tables provide another layer of protection by filtering or flagging suspicious content before it reaches the model. You can build rules that automatically escalate requests containing certain keywords or patterns to human review.
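Here is a minimal sketch of that kind of screening step, written in Python for illustration. The patterns, the length limit, and the route names are assumptions; pattern matching catches only the crudest injection attempts and is best treated as one layer among several.

```python
import re

# Illustrative phrases only; real rules would come from your own policy.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"system prompt", re.IGNORECASE),
]

MAX_INPUT_CHARS = 2000  # hypothetical limit


def sanitize(text: str) -> str:
    """Drop non-printable characters (keeping newlines and tabs), trim, and truncate."""
    cleaned = "".join(ch for ch in text if ch.isprintable() or ch in "\n\t")
    return cleaned.strip()[:MAX_INPUT_CHARS]


def screen_input(text: str) -> dict:
    """Sanitize the input and decide whether it goes to the AI task or to a human."""
    cleaned = sanitize(text)
    flagged = any(pattern.search(cleaned) for pattern in SUSPICIOUS_PATTERNS)
    return {"text": cleaned, "route": "human_review" if flagged else "ai_task"}
```

The model only ever sees the sanitized text, and flagged requests never reach it at all.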

When you build AI tasks with Camunda’s orchestration engine, you create a clear separation between the “business logic” of your process and the “creative output” of the model. This separation makes it much easier to test different scenarios, trace unexpected behaviors, and implement corrective measures. Camunda’s AI Task Agent also supports guardrails, such as a limit on the number of iterations it can perform or a cap on the number of tokens per request, which helps control costs.
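To show the general idea behind iteration and token limits, here is a small Python sketch of a bounded agent loop. It is not how Camunda implements these guardrails; the `Limits` class, the `call_model` callback, and the exception name are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Limits:
    max_iterations: int
    max_tokens_per_request: int


class GuardrailExceeded(Exception):
    """Raised when the agent runs out of allowed iterations."""


def run_agent(call_model: Callable[[int], bool], limits: Limits) -> int:
    """Call the model until it reports completion; return the iterations used.

    `call_model` receives the per-request token cap and returns True when done.
    """
    for iteration in range(1, limits.max_iterations + 1):
        if call_model(limits.max_tokens_per_request):
            return iteration
    raise GuardrailExceeded(
        f"agent did not finish within {limits.max_iterations} iterations"
    )
```

In a process model, the exception would typically map to an error event that hands the case to a human or a fallback path.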

4. Monitoring and auditing AI activity

You can’t govern what you can’t see. This might sound obvious, but many organizations deploy AI systems with minimal visibility into how they’re actually being used in production.

Camunda Optimize gives you comprehensive visibility into AI usage across all your processes. You can track the number of AI calls made per process or task, token usage (and therefore associated costs), response times and failure rates, and confidence scores or output quality metrics when available from your models.

This monitoring data supports multiple governance objectives. For cost control, you can spot overuse patterns and identify inefficient prompt chains. For policy compliance, you can prove that AI steps were reviewed when required. For performance tuning, you can compare model outputs over time or across different vendors to optimize both cost and quality.
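To illustrate the kind of aggregation behind those metrics, here is a short Python sketch that summarizes AI calls per process. The record fields are assumptions for the example and do not reflect how Optimize stores or exposes its data.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class AiCall:
    process_id: str
    tokens: int
    latency_ms: float
    failed: bool


def summarize(calls: list[AiCall]) -> dict[str, dict[str, float]]:
    """Aggregate call count, token usage, failure rate, and latency per process."""
    grouped: dict[str, list[AiCall]] = defaultdict(list)
    for call in calls:
        grouped[call.process_id].append(call)

    summary = {}
    for process_id, items in grouped.items():
        count = len(items)
        summary[process_id] = {
            "calls": count,
            "tokens": sum(c.tokens for c in items),
            "failure_rate": sum(c.failed for c in items) / count,
            "avg_latency_ms": sum(c.latency_ms for c in items) / count,
        }
    return summary
```

Multiplying the token totals by your provider's per-token price gives a first approximation of cost per process.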

You can build custom dashboards that break down AI usage by business unit, region, or product line, making AI usage measurable, accountable, and auditable. When auditors ask about your AI governance, you can show them actual data rather than just policy documents.

5. Multi-agent systems, modeled with guardrails

The future of enterprise AI isn’t just about better individual models—it’s about creating systems where multiple AI agents work together to achieve complex business goals.

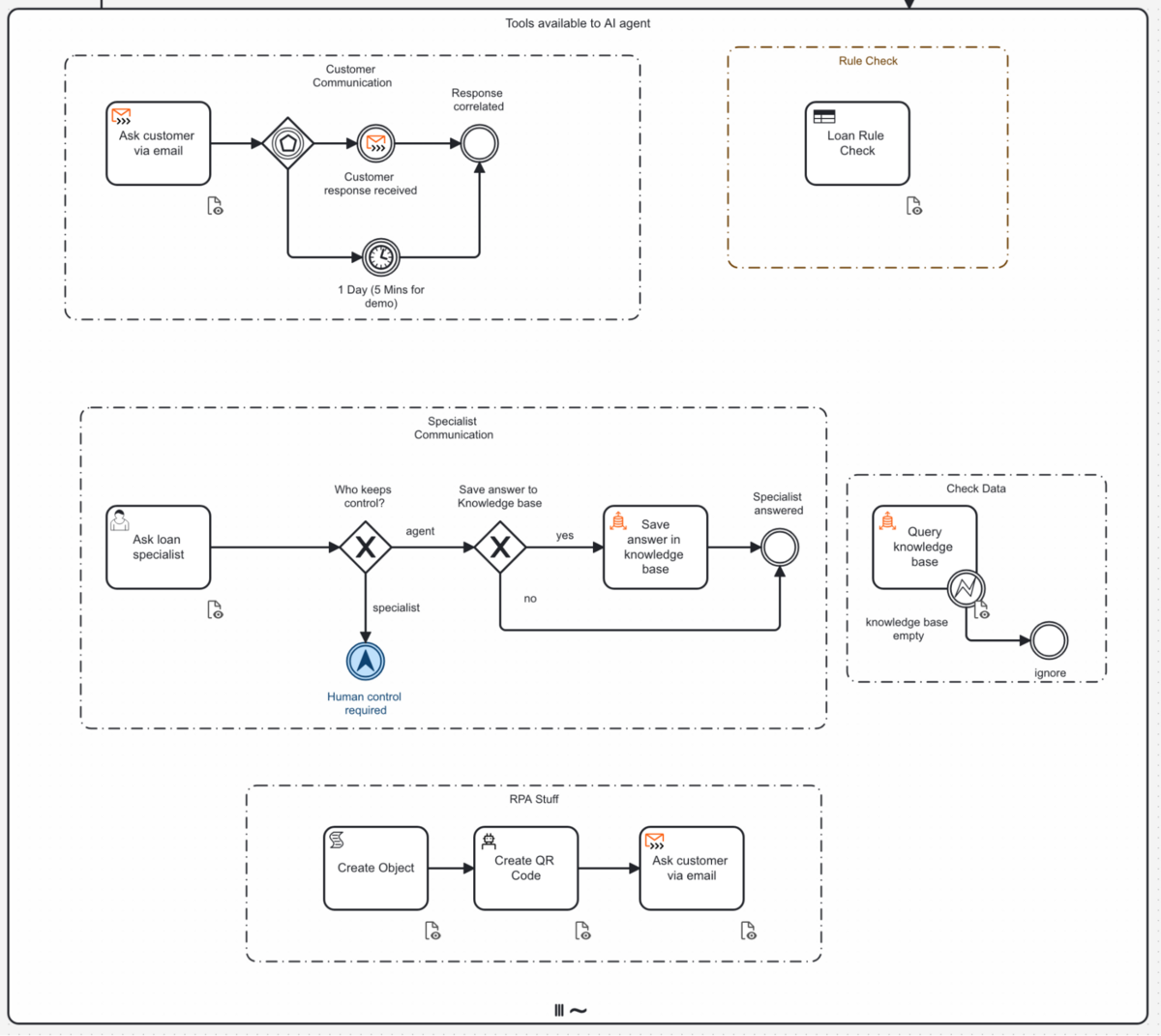

Camunda’s agentic orchestration lets you design and govern these complex systems with the same rigor you’d apply to any other business process. Each agent—whether AI, human expert, or traditional software—gets modeled as a task within a larger orchestration flow. The platform defines how agents collaborate, hand off work, escalate problems, and recover from failures.

You can design parallel agent workflows with explicit coordination logic, conditional execution paths based on agent outputs, and human involvement at any point where governance requires it. Composable confidence checks ensure work only proceeds when all agents meet minimum quality thresholds.

Here’s a concrete example: in a legal document review process, one AI agent extracts key clauses, another summarizes the document, and a human attorney provides final review. Camunda coordinates these steps, tracks outcomes, and escalates if confidence scores are low or agents disagree on their assessments.
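The escalation rule in that example can be sketched in a few lines of Python. The confidence threshold, the `verdict` field, and the step names are hypothetical; in practice this logic would sit in a gateway or decision table after the agent tasks complete.

```python
from dataclasses import dataclass

MIN_CONFIDENCE = 0.8  # hypothetical threshold


@dataclass
class AgentResult:
    agent: str
    verdict: str  # e.g. a risk label each agent assigns to the document
    confidence: float


def next_step(results: list[AgentResult]) -> str:
    """Escalate on low confidence or disagreement; otherwise go to final review."""
    if any(r.confidence < MIN_CONFIDENCE for r in results):
        return "escalate"
    if len({r.verdict for r in results}) > 1:
        return "escalate"
    return "attorney_review"
```

Either condition alone is enough to escalate, so a confident but dissenting agent still triggers a closer look.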

6. Enabling explainability and traceability

One of the most challenging aspects of AI governance is explainability. When an AI system makes a decision that affects your business or customers, stakeholders want to understand how and why that decision was made—and this is often a legal requirement in regulated industries.

Modern AI models are probabilistic systems that don’t provide neat explanations for their outputs. But Camunda addresses this by creating comprehensive audit trails that capture the context and process around every AI interaction.

For every AI step, Camunda persists the inputs provided to the model, outputs generated, and all prompt metadata. Each interaction gets correlated with the exact process instance that triggered it, creating a clear chain of causation. Version control for models, prompts, and orchestration logic means you can trace any historical decision back to the exact system configuration that was in place when it was made.
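As a rough picture of what one such audit entry might contain, here is a Python sketch of a record serialized as a JSON line. The field names and format are assumptions for illustration; they are not Camunda's actual storage schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


def _utc_now() -> str:
    """Current UTC time as an ISO 8601 string."""
    return datetime.now(timezone.utc).isoformat()


@dataclass(frozen=True)
class AiAuditRecord:
    process_instance_id: str  # ties the call to the process instance that triggered it
    model: str
    prompt_version: str
    prompt: str
    output: str
    human_reviewed: bool = False
    recorded_at: str = field(default_factory=_utc_now)


def to_json_line(record: AiAuditRecord) -> str:
    """Serialize a record as one JSON line, suitable for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)
```

Keeping the prompt version and model name on every record is what lets you trace a historical decision back to the configuration in place at the time.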

Through REST APIs, event streams, and Optimize reports, you can answer complex questions about AI usage patterns and decision outcomes. When regulators ask about specific decisions, you can provide comprehensive answers about what data was used, what models were involved, what confidence levels were reported, and whether human review occurred.

Camunda as a cornerstone of process-level AI governance

AI governance is a team sport that requires coordination across multiple organizational functions. You need clear policies, compliance frameworks, technical implementation, and ongoing oversight. No single platform can address all requirements, nor should it try to.

What Camunda brings to this collaborative effort is operational enforcement of governance policies at the process level. We’re not here to define your ethics policies—we provide the technical infrastructure to ensure that whatever policies you establish actually get implemented and enforced in your production AI systems.

Camunda gives you fine-grained control over exactly how AI gets used in your business processes, complete flexibility in model and hosting choices, robust orchestration of human-in-the-loop processes, comprehensive monitoring and auditing capabilities, protection against AI-specific risks like prompt injection, and support for cost tracking and usage visibility.

You bring the policies, compliance frameworks, and business requirements—Camunda helps you enforce them at runtime, at scale, and with the visibility and control that enterprise governance demands.

If you’re looking for a way to govern AI at the process layer—to bridge the gap between governance policy and operational reality—Camunda offers the controls, insights, and flexibility you need to do it safely, confidently, and sustainably as your AI initiatives grow and evolve.

Learn more

Looking to get started today? Download our ultimate guide to AI-powered process orchestration and automation to learn how to start implementing AI in your business processes quickly and effectively.
