The rapid evolution of AI agents has triggered an industry-wide focus on design patterns that ensure reliability, safety, and scalability. Two major players—OpenAI and Anthropic—have each published detailed guidance on building effective AI agents. Camunda’s own approach to agentic orchestration shows how an enterprise-ready solution can embody these best practices.

Let’s take a look at how Camunda’s AI agent implementation aligns with the recommendations from OpenAI and Anthropic, and why this matters for enterprise success.

Clear task boundaries and explicit handoffs

Both Anthropic and OpenAI stress the importance of defining clear task boundaries for agents. According to Anthropic’s recommendations, ambiguity in agent responsibilities often leads to unpredictable behavior and systemic errors. OpenAI similarly highlights that agents should have narrowly scoped responsibilities to ensure predictability and reliability.

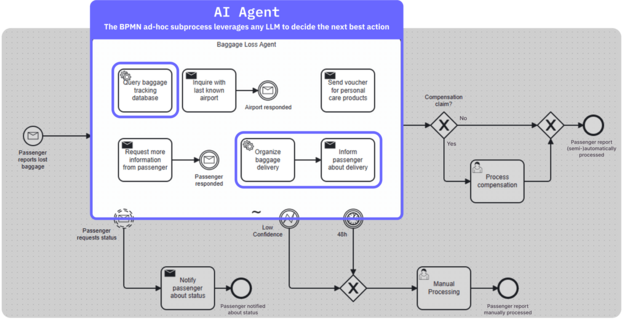

At Camunda, we address this by orchestrating agents through BPMN workflows. Each agent’s task is represented as a discrete service task with well-defined inputs and expected outputs. For instance, in our example agent implementation, an email is sent only after the Generate Email Inquiry task completes its work and delivers validated output. This sequencing ensures that each agent knows precisely when to act, what data it receives, and what deliverables it is accountable for, which minimizes the risk of cascading failures.

By visualizing these handoffs in BPMN diagrams, stakeholders in both technical and nontechnical roles can easily understand each agent’s responsibilities, audit workflows, and troubleshoot when necessary.
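To make the idea of an explicit handoff concrete, here is a minimal Python sketch of the email example. It is not Camunda code: in Camunda the sequencing lives in the BPMN model, and each step would be a service task. The names `generate_email_inquiry`, `validate_email`, and `send_email`, and the `EmailDraft` fields, are hypothetical stand-ins chosen for illustration. What the sketch shows is the contract: the send step receives only output that has passed validation, and it never runs if validation fails.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EmailDraft:
    """Hypothetical output contract of the Generate Email Inquiry task."""
    recipient: str
    subject: str
    body: str


class ValidationError(Exception):
    """Raised when a task's output does not satisfy its contract."""


def generate_email_inquiry(customer: dict) -> EmailDraft:
    # Stand-in for an LLM-backed task: builds a draft from the input variables.
    return EmailDraft(
        recipient=customer["email"],
        subject=f"Question about order {customer['order_id']}",
        body=f"Hello {customer['name']}, we have a question about your order.",
    )


def validate_email(draft: EmailDraft) -> EmailDraft:
    # The handoff gate: only a draft that passes these checks moves on.
    if "@" not in draft.recipient:
        raise ValidationError(f"invalid recipient: {draft.recipient!r}")
    if not draft.subject.strip() or not draft.body.strip():
        raise ValidationError("subject and body must be non-empty")
    return draft


def send_email(draft: EmailDraft, outbox: list) -> None:
    # Stand-in for the send step: appends to an in-memory outbox.
    outbox.append(draft)


def run_process(customer: dict, outbox: list) -> None:
    # Fixed order: generate, then validate, then send.
    # If validation raises, the send step is never reached.
    draft = generate_email_inquiry(customer)
    send_email(validate_email(draft), outbox)
```

Calling `run_process` with a malformed address raises `ValidationError` before `send_email` is ever invoked, which is the code-level equivalent of a process instance never reaching the send task.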

Narrow scope with composable capabilities

OpenAI’s guide highlights the benefits of agents that are designed with specialized, narrow scopes, which can then be composed into larger systems for more complex tasks. Anthropic echoes this, suggesting that mega-agents often become unwieldy and hard to trust.

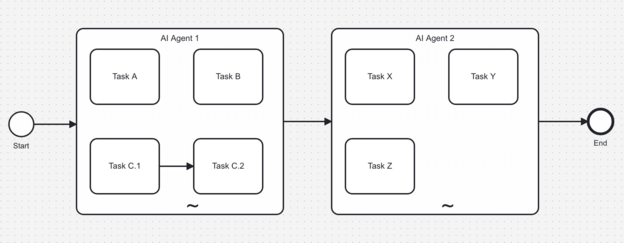

Camunda’s architecture embraces this philosophy through microservices-style orchestration. Each AI agent within Camunda focuses on mastering a single task—for instance, information retrieval, natural language generation, decision support, or classification. These specialized agents can then be strung together through BPMN models to create sophisticated end-to-end business processes.

Let’s look at a practical example.

In an insurance claims process, Camunda orchestrates a Document Extraction agent to pull key fields, a Fraud Detection agent to assess risk, and a Claims Decision agent to recommend next steps. Each agent operates independently yet collaboratively, enhancing system resilience and allowing incremental upgrades without overhauling the entire workflow.
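A rough sketch of that composition follows, again in plain Python rather than any Camunda API. Each agent is a function that reads the shared claim record and returns only the fields it owns, and a small orchestrator merges those outputs in sequence. The three agents are deliberately trivial rule-based stand-ins; the document format, the 10,000 threshold, and the field names are assumptions for illustration, and real agents would call models or services.

```python
from typing import Callable

# An agent reads the claim record and returns only the fields it owns.
Agent = Callable[[dict], dict]


def document_extraction(claim: dict) -> dict:
    # Hypothetical stand-in: parse "Key: value" lines from the claim document.
    fields = {}
    for line in claim["document"].splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip().lower()] = value.strip()
    return {"amount": float(fields["amount"]), "policy": fields["policy"]}


def fraud_detection(claim: dict) -> dict:
    # Hypothetical rule: large claims are treated as higher risk.
    return {"risk": "high" if claim["amount"] > 10_000 else "low"}


def claims_decision(claim: dict) -> dict:
    # Recommends a next step based only on upstream agents' outputs.
    if claim["risk"] == "high":
        return {"recommendation": "manual_review"}
    return {"recommendation": "approve"}


def run_pipeline(claim: dict, agents: list[Agent]) -> dict:
    # The orchestrator merges each agent's output into a shared record,
    # so any single agent can be replaced without touching the others.
    record = dict(claim)
    for agent in agents:
        record.update(agent(record))
    return record


CLAIMS_PIPELINE = [document_extraction, fraud_detection, claims_decision]
```

Because the orchestrator depends only on the `Agent` signature, replacing `fraud_detection` with a different implementation is a one-line change to `CLAIMS_PIPELINE`; the extraction and decision agents stay untouched.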

Monitoring, error handling, and human-in-the-loop

Both OpenAI and Anthropic emphasize that no agent should operate without proper supervision mechanisms. Agents must report their states, signal when they encounter issues, and escalate gracefully to human overseers.

Camunda is particularly strong in this area thanks to our suite of tools like Operate, Optimize, and Tasklist. Here’s how we achieve enterprise-grade monitoring and human-in-the-loop design:

- Full observability: Camunda Operate provides real-time visibility into every process instance, showing exactly which agent did what, when, and with what outcome.

- Error boundaries and fallbacks: BPMN error boundary events and timer boundary events allow processes to anticipate common failures (such as timeouts or bad data) and take corrective action, such as retrying, skipping, or escalating to a human operator.

- Seamless human escalation: When agents cannot confidently complete a task—for example, due to ambiguity or ethical concerns—Camunda can dynamically activate a human task, prompting a person to step in, review, and make decisions.
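The retry-then-escalate pattern behind these bullets can be sketched as follows. This is an illustrative Python model, not how Camunda implements boundary events: `TaskFailed` stands in for a BPMN error (a timeout is modeled the same way), `HumanTaskQueue` stands in for a Tasklist inbox, and the agent is assumed to return an `(answer, confidence)` pair. The retry count and the 0.8 confidence threshold are arbitrary example values.

```python
from dataclasses import dataclass, field
from typing import Callable


class TaskFailed(Exception):
    """Models a BPMN error (or a timeout) thrown by an agent task."""


@dataclass
class Outcome:
    status: str            # "completed" or "escalated"
    result: object = None
    attempts: int = 0
    reason: str = ""


@dataclass
class HumanTaskQueue:
    # Stand-in for a Tasklist-style inbox of user tasks.
    tasks: list = field(default_factory=list)

    def create(self, payload: dict, reason: str) -> None:
        self.tasks.append({"payload": payload, "reason": reason})


def run_with_fallback(agent: Callable[[dict], tuple], payload: dict,
                      queue: HumanTaskQueue, max_attempts: int = 3,
                      min_confidence: float = 0.8) -> Outcome:
    last_error = ""
    for attempt in range(1, max_attempts + 1):
        try:
            answer, confidence = agent(payload)
        except TaskFailed as exc:
            # Failure: remember why and retry, up to max_attempts.
            last_error = str(exc)
            continue
        if confidence < min_confidence:
            # Ambiguous answer: escalate to a person instead of retrying.
            reason = f"low confidence ({confidence:.2f})"
            queue.create(payload, reason)
            return Outcome("escalated", attempts=attempt, reason=reason)
        return Outcome("completed", result=answer, attempts=attempt)
    # Retries exhausted: hand the work to a human task.
    reason = f"failed after {max_attempts} attempts: {last_error}"
    queue.create(payload, reason)
    return Outcome("escalated", attempts=max_attempts, reason=reason)
```

One design choice worth noting: a low-confidence answer is escalated immediately rather than retried, on the assumption that asking the same agent again rarely resolves genuine ambiguity, while transient failures such as timeouts are worth a bounded number of retries.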

In the upcoming 8.8 release, scheduled for October, Camunda is taking this a step further by connecting these features directly to the agent. Failed tasks will automatically trigger the agent to reevaluate the prompt, allowing the agent to respond dynamically as the environment changes. Operate will provide real-time visibility into the agent, allowing seamless human escalation and recovery.

These capabilities ensure that agents augment rather than replace human judgment, a key principle recommended by both OpenAI and Anthropic.

Composability and reusability

Anthropic strongly recommends composable agent architectures to allow rapid iteration and minimize technical debt. Composable systems are more adaptable, easier to troubleshoot, and more cost-effective to maintain.

Camunda’s approach to process design aligns perfectly with this recommendation. Our BPMN models are built around modularity, enabling teams to:

- Swap out individual agents without rewriting the entire workflow

- Reuse standard subprocesses across different projects

- Version-control agent behaviors separately, making it easy to A/B test and roll back changes

Drawing from IBM’s insights on agent design, Camunda’s platform allows enterprises to build libraries of reusable agent modules. These can be assembled like building blocks to rapidly create new processes or modify existing ones, significantly accelerating innovation cycles.
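The swap, version, and roll-back workflow in the list above can be illustrated with a small routing table. The `AgentRegistry` class here is hypothetical and is not a Camunda API; it simply shows one way to pin a stable agent version per task, send a percentage of process instances to a candidate version for an A/B test, and roll back by routing everything to the stable version again. Bucketing hashes the instance id, so a given process instance always sees the same variant.

```python
import hashlib
from typing import Callable, Optional


class AgentRegistry:
    """Hypothetical registry of versioned agent implementations per task."""

    def __init__(self) -> None:
        self._impls: dict[str, dict[str, Callable]] = {}
        # task -> (stable version, candidate version or None, candidate percent)
        self._routing: dict[str, tuple[str, Optional[str], int]] = {}

    def register(self, task: str, version: str, impl: Callable) -> None:
        self._impls.setdefault(task, {})[version] = impl

    def route(self, task: str, stable: str,
              candidate: Optional[str] = None, percent: int = 0) -> None:
        # Rolling back is just routing all traffic to a known-good version.
        for version in (stable, candidate):
            if version is not None and version not in self._impls.get(task, {}):
                raise KeyError(f"{task} has no version {version!r}")
        self._routing[task] = (stable, candidate, percent)

    def resolve(self, task: str, instance_id: str) -> tuple[str, Callable]:
        stable, candidate, percent = self._routing[task]
        version = stable
        if candidate is not None:
            # Deterministic bucket in 0..99 derived from the instance id.
            digest = hashlib.sha256(f"{task}:{instance_id}".encode()).hexdigest()
            if int(digest, 16) % 100 < percent:
                version = candidate
        return version, self._impls[task][version]
```

For example, `registry.route("classify", "v1", candidate="v2", percent=10)` would send roughly a tenth of instances to `v2`, and `registry.route("classify", "v1")` restores the previous behavior without touching any other agent.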

Transparent orchestration and explainability

OpenAI’s guide makes it clear: trustworthy AI systems must provide explainable decision pathways. Stakeholders need to understand why an agent acted a certain way, especially when decisions have legal, ethical, or financial consequences.

Camunda’s BPMN-driven orchestration inherently provides this transparency. Every agent interaction, every decision point, and every data handoff is visually modeled and logged. Teams can:

- Trace the complete lineage of a decision from input to output

- Generate audit trails automatically for compliance needs

- Explain system behavior to both technical audiences and nontechnical stakeholders

In highly regulated industries like banking, healthcare, or insurance, this kind of transparency isn’t just a nice-to-have—it’s a nonnegotiable requirement. With Camunda, organizations can meet these standards confidently.
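As a sketch of what such an audit trail needs to capture, the hypothetical `AuditLog` below records, for every step, which agent ran, what it received, what it produced, and when. `lineage` reconstructs a decision's path for one process instance, and `export_jsonl` serializes it for archiving. In Camunda, Operate surfaces this kind of history; this plain-Python version only illustrates the data involved, and the `name@version` agent naming scheme is an assumption.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEntry:
    instance_id: str
    step: str
    agent: str
    inputs: dict
    outputs: dict
    timestamp: str


class AuditLog:
    """Append-only record of every agent step, keyed by process instance."""

    def __init__(self) -> None:
        self._entries: list[AuditEntry] = []

    def record(self, instance_id: str, step: str, agent: str,
               inputs: dict, outputs: dict) -> None:
        # Shallow-copy the data so later mutation by the caller
        # does not rewrite what was logged.
        self._entries.append(AuditEntry(
            instance_id, step, agent, dict(inputs), dict(outputs),
            datetime.now(timezone.utc).isoformat()))

    def lineage(self, instance_id: str) -> list[AuditEntry]:
        # Every step that contributed to this instance, in execution order.
        return [e for e in self._entries if e.instance_id == instance_id]

    def export_jsonl(self, instance_id: str) -> str:
        # One JSON object per line, e.g. for a compliance archive.
        return "\n".join(json.dumps(asdict(e), sort_keys=True)
                         for e in self.lineage(instance_id))
```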

Centralized orchestration provides guardrails

Today, AI agents do not yet exhibit the level of trustworthiness, transparency, or security required to make a fully autonomous swarm of agents safe for enterprise contexts. In decentralized models, agents independently delegate tasks to one another, which can lead to a lack of oversight, unpredictable behavior, and challenges in ensuring compliance.

At Camunda, we believe that the decentralized agent pattern represents an exciting vision for the future. However, we see it as a pattern that is still years away from being viable for enterprise-grade AI systems.

For now, Camunda strongly supports centralized or manager patterns. With this approach, a single orchestrator (in Camunda’s case, the BPMN engine) manages when, why, and how agents act. This centralized orchestration ensures:

- Full visibility into agent activities

- Clear accountability for decision points

- Easier implementation of security, compliance, and auditing mechanisms
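The difference between the manager pattern and decentralized delegation comes down to who has the final say on the next step. In the hypothetical `Orchestrator` below, an agent may propose a handoff, but the orchestrator accepts it only if the process model allows that transition, and it records every proposal for accountability. The `transitions` dictionary is a simplified stand-in for a BPMN model; this illustrates the pattern and is not Camunda's engine.

```python
from typing import Callable

END = "end"

# An agent takes the current state and returns (new_state, proposed_next_step).
AgentFn = Callable[[dict], tuple[dict, str]]


class DelegationRejected(Exception):
    """Raised when an agent proposes a handoff the process model does not allow."""


class Orchestrator:
    """Hypothetical central manager: the model, not the agents, decides what runs next."""

    def __init__(self, agents: dict[str, AgentFn],
                 transitions: dict[str, set[str]], start: str) -> None:
        self.agents = agents
        self.transitions = transitions
        self.start = start
        # (step, proposed next step, accepted?) for every decision point.
        self.history: list[tuple[str, str, bool]] = []

    def run(self, state: dict, max_steps: int = 10) -> dict:
        step = self.start
        for _ in range(max_steps):
            state, proposed = self.agents[step](state)
            accepted = proposed in self.transitions.get(step, set())
            self.history.append((step, proposed, accepted))
            if not accepted:
                raise DelegationRejected(
                    f"{step} -> {proposed} is not in the process model")
            if proposed == END:
                return state
            step = proposed
        raise RuntimeError(f"no end reached within {max_steps} steps")
```

An agent that tries to delegate to a step that was never modeled, such as a hypothetical `payments_agent`, is stopped with `DelegationRejected` instead of silently expanding its own authority, and the rejected proposal remains visible in `history`.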

Our philosophy is simple: while the future may hold promise for decentralized agent ecosystems, today’s enterprises need reliability, explainability, and control. Centralized orchestration, powered by Camunda, offers the safest and most effective path forward. You can adopt it immediately, without giving up the flexibility to take advantage of future improvements and innovations in AI.

Enterprise-grade agentic orchestration is here!

By closely following these industry best practices, Camunda delivers an enterprise-ready framework for building, deploying, and scaling AI agents. Our approach balances automation with control, speed with safety, and complexity with clarity.

We believe that AI agents should operate transparently, predictably, and with human-centric governance. With Camunda, enterprises gain not just a platform but a reliable foundation to scale AI responsibly and sustainably.

Want to learn more? Dive into our latest release announcement or check out our guide on building your first AI agent.

Stay tuned—the future of responsible, scalable AI is being built right now, and Camunda is at the forefront.
